2009-12-24

Security Questions

Back in the day, it was always one thing in particular: "Mother's maiden name?" Obviously, only you will know that, because it's not important for anything. Well... except that NOW it's important, because it got used everywhere as a security question. So every bank I've dealt with knows it, because they required it before they would do business with me.

So now that's basically been dropped, and a whole slew of other security questions have popped up. "Mother's date of birth?" "Childhood pet's name?" "Where did you go on your honeymoon?" (These are all actual examples.) Obviously good security questions, because no one would want to know any of this trivia.

HEY SECURITY DUMBASS -- AS SOON AS YOU ASK THIS QUESTION IT BECOMES OF INTEREST TO AN ATTACKER, AND THEREFORE A SECURITY VULNERABILITY.

What really pisses me off is that over time, these financial and business sites are going to know every scrap of personal information about my life if this goes on. All my relatives' and friends' birthdays. Nicknames and pets, favorite books, authors, places I dream of vacationing, etc., etc., etc. Every time one becomes somewhat widespread, they have to switch to something even more esoteric and private.

Nowadays I'm running into multiple sites (that I've used in the past) that refuse to allow me access unless I give them some new tidbits of "security question" information. The nice girls at my local bank see my distress and helpfully suggest "Just make something up!" Which has the disadvantages that (a) I'm not going to remember it and will need to write it down, and (b) the fine print of the terms of service demands honest and factual information, and while I'm sure the tellers at the bank don't mind, I'm equally sure that the corporate entity will be happy to crucify me over a transgression like that if we ever get into a dispute.

Fuck that.

2009-12-11

Disk Icons

2009-11-14

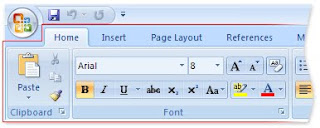

MS Office Ribbons

So for the first time the computer literacy class I teach has been forced to switch over to MS Office 2007 products, and hence this past week I was finally forced to use the MS Office Ribbon interface. I tried to stay away from it, but now here it is finally. I really don't like it.

Here's the thing I really don't like about it (aside from just the radical change from anything that's come before): It's semi-impossible to describe to someone else (say, a student) where they should be clicking.

In some sense, this is basically the primary disadvantage of the GUI having been doubled in intensity. With a command-line interface, it's easy to write out your instructions and transmit them in writing or verbally to another person. The GUI makes that piece of business a lot harder (now the geographic location that you're clicking on makes a big difference, and you wind up clumsily describing the pictures and icons that you're trying to activate).

So with the MS Office Ribbon, this is exacerbated even further. At least with the traditional menu bar, there was a linear order to each step of a process. Click on one menu item, another linear list drops down, find one item in that list, proceed to the next, etc. For example, to center the contents of a cell in Excel, I could provide a handout that says, "Click on: Format > Cells > Alignment > Horizontal > pick 'Center'". But now, I have to say something like "On the Home Tab, find the Alignment section, and kind of near the middle of that section there's a button that kind of has its lines centered, click on that". Very, very clumsy... and even more so for lots of other examples we could think of.

2009-10-08

Programming Project Idea

This would be more advanced than anything I've done in my classes, even though some of the programs could be relatively short. Interesting both for basic programming skill and insights on password security. Maybe seed the list with some instructor-made weak passwords as a baseline target.

2009-07-29

Game Theory Lectures

However, I keep being reminded that this is a course being given in Yale's Economics department. And I've long held a few very key critiques about the foundations of standard economic theory, that I feel make the entire enterprise miserably inaccurate. What I didn't expect is for these Game Theory lectures to feature a high-intensity spotlight directly on those shortcomings, in practically every single session.

Critique #1 is that economics deals only with money, and wipes out our capacity to deal with other values. Critique #2, probably more important, is that economics fatally depends on a “rational actor” assumption for all involved, which is simply not true. Let's consider them in order:

Critique #1: Economic theory is all about money, and the widespread use of the theory destroys our other values like family, community, craftsmanship, healthy living, emotional satisfaction, and good samaritanhood. As one wise man said, “They don't take these things down at the bank,” and therefore, they get obliterated when economic theory is put in play.

Now, Professor Polak makes a good, painstaking show in Session #1 of trying to fend off this criticism. “You need to know what you want,” he says, and runs an extended example wherein, if one player really was interested in the well-being of his partner in a game, well, that could be accounted for by assessing the value of those feelings, adding or subtracting them in the payoff matrix appropriately, and then running the same Game Theory analysis on the new matrix, finally arriving at a different result. (Of course, along the way he also snidely refers to this caring player as an “indignant angel”.) See? Game Theory can handle all kinds of different values, not just money.
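Professor Polak's move of folding a player's feelings into the payoff matrix can be sketched in a few lines. This is a minimal sketch under stated assumptions: the 2×2 payoff numbers and the altruism weight `w` (how much a player values the partner's payoff) are hypothetical, chosen only for illustration, and are not the lecture's actual matrix.

```python
# Hypothetical 2x2 game: PAYOFFS[(mine, theirs)] = (my payoff, partner's payoff).
# The numbers are illustrative assumptions, not the lecture's actual matrix.
PAYOFFS = {
    ("a", "a"): (0, 0),
    ("a", "b"): (3, -1),
    ("b", "a"): (-1, 3),
    ("b", "b"): (1, 1),
}
STRATEGIES = ["a", "b"]


def adjusted_utility(w):
    """Row player's utility when they value the partner's payoff with weight w."""
    return {cell: mine + w * theirs for cell, (mine, theirs) in PAYOFFS.items()}


def strictly_dominates(u, s, t):
    """True if strategy s beats strategy t against every opponent strategy."""
    return all(u[(s, o)] > u[(t, o)] for o in STRATEGIES)


selfish = adjusted_utility(0)  # only the player's own payoff counts
caring = adjusted_utility(1)   # the partner's payoff counts equally

print(strictly_dominates(selfish, "a", "b"))  # True: "a" dominates for a selfish player
print(strictly_dominates(caring, "a", "b"))   # False: the new matrix changes the answer
```

The dominance check itself never changes; only the matrix fed into it does, which is exactly the defense being offered in the lecture.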

But let's look later in the same lecture, where he has the students play the “two-thirds the average game” (more on that later). He holds up a $5 bill and says that the winner will receive this as a prize. Now – does he do the analysis of “what people want” (payoffs), which he just said was so keenly important? No, he does not. Only 15 minutes after this front-line defense, it goes forward without comment that obviously the only value for anyone in the game is the money. So, even though we just lectured on how economic theory can handle different values, we immediately thereafter turn around and act out exactly the opposite assumption. Maybe some people want the $5... maybe some want to corrupt the results for their snotty know-it-all classmates... But no, we get it played out right before our eyes, immediately following the defense that “all values can be handled”: as soon as money comes into the picture, in practice, we dispense with all other values and speak of nothing except the cash money.

Critique #2: Economic theory presumes “rational players”, where all the people involved knowingly work to their own best interests all the time. Frankly, that's just downright absurd. People are routinely (1) uneducated or uninformed about what's best for themselves, (2) barred from receiving key information by more powerful institutions or interests, (3) obviously non-rational in instances of emotional stress, drug use, mental failures, and modern Christmas purchasing behavior, and (4) proven by cognitive brain science to be unable to correctly gauge simple probabilities and risk-versus-reward.

Now, consider lecture #1, where Professor Polak introduces game payoff matrices, and the idea of avoiding dominated strategies (that is, a strategy where some other available choice always works out better). With exceeding care, he transcribes each “Lesson” along the way onto the board, including this one: “Lesson #1: Do not play a strictly dominated strategy”. Okay, that's a reasonable recommendation.

But about 10 minutes later, he pulls a devious sleight-of-hand. Analyzing another game, he asks what strategy we should play. “Ah,” he says, “Notice that for our opponent strategy A is dominated, so you know they won't play that, they must instead play strategy B, and thus we can respond with strategy C.” Well, no, that reasoning about our opponent (the 2nd logical step here) is completely spurious; it only makes sense if our opponent is actually following our lesson #1. But have they taken a Game Theory class? Do they know about “dominated strategy” theory? Do they actually follow received lessons? None of those things are necessarily (or even likely, I'd argue) true.

In other words, he assumes that all players are equally well-informed and “rational”, which isn't supportable. And this assumption is kept secret and hidden. It would be one thing if Professor Polak came out and said “For the rest of our lectures, let's also assume that our opponents are following the same lessons we are,” but no, he quite scrupulously avoids calling attention to the key logical gap.
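The step taken silently here is the core of iterated elimination of strictly dominated strategies. Below is a minimal sketch with a hypothetical game: the strategy names echo the A/B/C of the quoted example, but the payoffs are invented for illustration. Note where the hidden assumption lives. The loop deletes the opponent's dominated strategies exactly as it deletes ours, which is only justified if the opponent reasons the same way.

```python
# Hypothetical payoffs indexed by (our strategy, opponent's strategy).
OURS = {("C", "A"): 0, ("C", "B"): 3, ("D", "A"): 4, ("D", "B"): 1}
THEIRS = {("C", "A"): 0, ("C", "B"): 2, ("D", "A"): 1, ("D", "B"): 3}


def iterated_elimination(rows, cols, u_row, u_col):
    """Repeatedly delete strictly dominated strategies for both players.

    Deleting the *opponent's* dominated strategies is the step that silently
    assumes the opponent is "rational" and follows the same lesson we do.
    """
    rows, cols = list(rows), list(cols)
    changed = True
    while changed:
        changed = False
        # Our strategies that some other strategy of ours always beats.
        for r in list(rows):
            if any(all(u_row[(s, c)] > u_row[(r, c)] for c in cols)
                   for s in rows if s != r):
                rows.remove(r)
                changed = True
        # The opponent's strategies that some other strategy of theirs always beats.
        for c in list(cols):
            if any(all(u_col[(r, t)] > u_col[(r, c)] for r in rows)
                   for t in cols if t != c):
                cols.remove(c)
                changed = True
    return rows, cols


print(iterated_elimination(["C", "D"], ["A", "B"], OURS, THEIRS))  # (['C'], ['B'])
```

In this toy game, neither of our own strategies is dominated at the start. Without the assumption that the opponent discards A, the analysis would stop there with no recommendation; the answer "play C" exists only because of the step being criticized.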

And he does it again, even more outrageously, in Session #2, when analyzing the class's play of the “two-thirds the average game” (a group of people each guess a number from 1-100; take the average; the winner is whoever guessed closest to 2/3 of that average). He has a spreadsheet of everyone's guesses in front of him. Speaking of guesses above 67 (2/3 of 100), he says, "These strategies are dominated – We know, from the very first lesson of the class last time, that no one should choose these strategies." Except that, as he points out mere seconds later, several people did play them! (4 people in the class had guesses over 67; this occurs 46 minutes into lecture #2.) Nonetheless, he continues: "We've eliminated the possibility that anyone in the room is going to choose a strategy bigger than 67...". But how can you possibly contend that you've “eliminated the possibility” when you have hard data literally in your hand showing that that's simply not true? Answer: It's the “rational player” requirement of all economic theory, which demonstrably collapses into sand if the logical gap is recognized and/or refuted. This infected logic continues throughout the class; in sessions #3 and #4 he repeats the same goose-step in regard to "best response" (1:10 into lecture #4: "Player 1 has no incentive to play anything different... therefore he will not play anything different."), and so on and so forth.
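To make the rules concrete, here is a minimal sketch of scoring the two-thirds game. The player names and guesses are hypothetical, not the class's actual data. Because the average can never exceed 100, the target can never exceed 2/3 × 100 ≈ 66.67, which is the reasoning behind calling guesses above 67 dominated. The code also flags any such guesses, since real players do make them.

```python
from fractions import Fraction

# Even if every player guesses 100, two-thirds of the average is 200/3 (about 66.67).
MAX_TARGET = Fraction(2, 3) * 100


def score_two_thirds(guesses):
    """Return (target, winners, dominated) for the 'two-thirds the average' game.

    guesses maps a player's name to a guess in 1..100. Winners are the players
    closest to 2/3 of the average (ties are all returned); dominated lists the
    players whose guess is above the highest possible target.
    """
    average = Fraction(sum(guesses.values()), len(guesses))
    target = Fraction(2, 3) * average
    best = min(abs(g - target) for g in guesses.values())
    winners = sorted(p for p, g in guesses.items() if abs(g - target) == best)
    dominated = sorted(p for p, g in guesses.items() if g > MAX_TARGET)
    return target, winners, dominated


# Hypothetical guesses, for illustration only.
target, winners, dominated = score_two_thirds({"ann": 33, "bob": 22, "cat": 90, "dan": 50})
print(float(target), winners, dominated)  # 32.5 ['ann'] ['cat']
```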

2009-07-27

Essay on Time Management

http://www.paulgraham.com/makersschedule.html

In brief -- Managers work in hour-long blocks through the day; great for meeting people and having a friendly chat. Makers, however (writers, artists, programmers, craftsmen), work in half-day blocks at the minimum. Interfacing the two -- e.g., managers calling an hour-long meeting at some random open slot in their schedule -- causes the makers to completely lose the in-depth concentration on a task that they require. Call this "thrashing" or "interrupts" or "exceptions", if you like. This blows away a half or a full day of productive work when it happens.

Great observation, and it rings extremely true in my own experience. One of the reasons I'm so happy to be outside the corporate environment these days.

2009-06-05

Jury Selection

I was confused and mystified by this for a while. My friend Collin and I put our heads together, and I think we finally stumbled onto an explanation.

The point is this: Everyone wants to avoid a hung jury (that is, a mistrial, forcing the court & lawyers to try the case all over again another time). The way a jury really works behind the scenes in a criminal trial is that you start with some yes-votes and some no-votes, and over the course of a day or so one side simply batters down the resistance of the other (often through insults and intimidation, as witnessed by another friend), until there is finally a unanimous vote. And who could possibly interrupt this process? You guessed it, the rare personality type who is willing to reject the mob mentality and stand out, disagreeing with everyone else in a crowded, public courtroom.

It seemed odd to me that when we disagreed with the rest of the pool like this, both the prosecution & defense got all jumpy with us about it. You would think (from an expected-value analysis) that if you asked a defense attorney the question, "Which would you rather have as a result of a trial: a conviction or a mistrial?", the answer would be "a mistrial" (since there's at least some probability that your client is found innocent in the next trial). But now I'm guessing that this fails to take into account the opportunity-cost to the attorney in their time; possibly they would actually, ultimately prefer the conviction, and be able to move to other more promising cases, rather than re-try a case which apparently is not a good cause in the first place. (This is similar to the well-known disconnect in incentives between a house seller and the broker working on a commission.) They're not making this loudly known, but I now suspect that avoiding a hung jury may be priority #1 for all the lawyers and judges in selecting a jury, even beyond winning the actual case. Therefore, the able-to-disagree-alone-with-a-room-full-of-people personalities have got to go.

For those of you who want to get out of jury duty, I therefore give a simple, completely foolproof and hassle-free procedure. There's absolutely nothing difficult about it, and it requires no creativity. Simply pick something, anything, in the questions and disagree with everyone else, and you will be immediately released. If you're honest, in fact, it's practically impossible not to do this.

2009-05-24

Grading On a Curve Sucks

Back in Fall 2006, Thought & Action magazine published an article by Richard W. Francis (Professor Emeritus in Kinesiology, California State Fresno), asserting that grading on a curve is the only way to properly compute grades (titled in a propagandist fashion, "Common Errors in Calculating Final Grades"). Here's my letter to the editor from that time:

-------------------------------------------------

Dear Editor,

Richard W. Francis proposes a system for standardizing class grading (Thought and Action, Fall 2006, "Common Errors in Calculating Final Grades"). The system takes as its priority the relative class ranking of students, even though I've never seen that utilized for any purpose in any class I've been involved with.

Mr. Francis responds to some criticism of his system effectively grading on a curve. His response is that instructors can "use good judgment and the option to draw the cutoff point for each grade level, as they deem appropriate". In other words, after numbers are crunched at the end of the term, the grade awarded is based on a final, subjective decision by the instructor. Moreover, there is no way to tell students clearly at the start of the term what is required of them to achieve an "A", or any other grade, in the course.

The example presented in the article of a problem in test weighting seems unpersuasive. We are presented with a midterm (100 points, student performance drops off by 10 points each), and a final exam (200 points, student performance drops off by 5 points each). It is presented as an "error" that the class ranking matches the midterm results. But since the relative difference in the midterm is so large (10% difference each step) and the final so small (2.5% difference each step; even scaled double-weight that's only 5% per step) this seems to me like a fair end result.
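The disagreement is easy to reproduce. The sketch below assumes five hypothetical students, not the article's actual table. Midterm scores fall by 10 points per student out of 100, and final scores fall by 5 points per student out of 200 but in the opposite order. The sketch compares a plain point total with a standardized scheme that converts each exam to T-scores (50 + 10z) and gives the final double weight. That weighting rule is an assumption about how such a system would be applied.

```python
from statistics import mean, pstdev

STUDENTS = ["S1", "S2", "S3", "S4", "S5"]
MIDTERM = [100, 90, 80, 70, 60]    # out of 100: 10-point (10%) steps
FINAL = [180, 185, 190, 195, 200]  # out of 200: 5-point (2.5%) steps, reverse order


def t_scores(scores):
    """Standardize to T-scores: mean 50, standard deviation 10."""
    m, s = mean(scores), pstdev(scores)
    return [50 + 10 * (x - m) / s for x in scores]


def ranking(totals):
    """Student names from highest to lowest total."""
    return [name for _, name in sorted(zip(totals, STUDENTS), reverse=True)]


raw_totals = [m + f for m, f in zip(MIDTERM, FINAL)]  # final already worth 2x the points
t_mid, t_final = t_scores(MIDTERM), t_scores(FINAL)
t_totals = [(m + 2 * f) / 3 for m, f in zip(t_mid, t_final)]  # final given double weight

print(ranking(raw_totals))  # ['S1', 'S2', 'S3', 'S4', 'S5']: follows the midterm
print(ranking(t_totals))    # ['S5', 'S4', 'S3', 'S2', 'S1']: follows the final
```

In raw points, the midterm's larger spread decides the order, which is the outcome the letter defends. Standardizing inflates the final's small 2.5% steps until they reverse it.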

Take student A in the example, who receives an "A" on the midterm and a "C+" on the final (by the most common letter grade system). In the "erroneous" weighting he receives a final grade of "B", while in the standardized system he has the T-score for a "D+". Clearly the former is the more legitimate reflection of his overall performance.

As an aside, I have a close relation who was denied an "A" grade in professional school due to an instructor grading on the curve. He still complains bitterly about the effect of this one grade on his schooling, now 40 years after the fact. Any subjective or curve-based system for awarding student grades at the end of a term damages the public esteem for our profession.

Daniel R. Collins

Adjunct Lecturer

Kingsborough Community College

2009-05-23

Winning Solitaire?

Most games are lost, but I can usually eke out a win in about 20-30 minutes of playing. However, just today I probably lost 30+ games in a row over maybe 2 hours. Still no win so far today. I have to be careful, because I get in the habit of hitting "deal" instantly after a loss (my "hit", if you will), and after an extended time my hand starts to go numb, my eyesight starts getting all wonky, and I start making terrible mistakes. (Is it fun? No, I feel a vague sense of irritation the whole time I'm playing, until I actually win and can finally close the application. Hopefully.)

So this brings up the question: What percentage of games should you be able to win? Obviously I don't know, but my intuition says about 20% at most. I'm also entertaining the idea of building a robot solver, improving its play, and seeing what fraction of games it can win. Apparently this is actually an outstanding research problem; Professor Yan at MIT wrote in 2005 that this is in fact “one of the embarrassments of applied mathematics”.

The other thing is that all of the work done on the problem apparently uses some astoundingly variant definitions for the game. First, the "solvers" that I see are all based on the variant game of "Thoughtful Solitaire", apparently preferred by mathematicians because it gives you full information (i.e., the known location of all cards), so players are encouraged to spend hours of time considering just a few moves at a time (gads, save me from these frickin' mathematicians like that! Deal with real-world incomplete information, for god's sake!).

Secondly, they use the results from this "Thoughtful Solitaire" (full information, recall; claiming an 82% to 91% success rate) also as the percentage of regular Solitaire games that are "solvable". But this meaning of "solvable" is only a hypothetical solution rate for an all-knowing player; that is, there are many moves during a regular game of Solitaire that lead to dead-ends, which the non-omniscient player can only avoid by sheer luck. If they're careful, the researchers correctly call this an "upper bound on the solution rate of regular Solitaire" (and my intuition tells me that it's a very distant bound); if they're really, really sloppy, then they use the phrases "odds of winning" and "percent solvable" interchangeably (when they're not remotely the same thing).

So currently we're completely in the dark about what the success rate of the best (non-omniscient) player would be in regular Solitaire. I'll still conjecture that it's got to be under 50%.

Edit: Circa 2012 I wrote a lightweight Solitaire-solving program in Java. Success of course varies greatly by rule parameters selected: for my preferred draw-3, pass-3 game it wins about 7.6% of the games (based on N = 100,000 games played; margin of error 0.3% at 95% confidence). My own manual play on the MS Windows 7 solitaire wins over 8% (N = 3365), so it seems clear that there's still room for improvement. See code repository on GitHub for full details.
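For anyone checking the margin of error quoted above: a common conservative 95% bound for a proportion is 1.96·sqrt(0.25/N), roughly 1/sqrt(N), which for N = 100,000 gives about 0.3%. The sketch below computes that alongside the usual Wald margin, which uses the observed win rate instead of the worst case. Which formula the original program used isn't stated, so matching the 0.3% figure is only suggestive.

```python
import math


def conservative_moe(n, z=1.96):
    """Worst-case (p = 0.5) margin of error for a proportion; about 1/sqrt(n) at 95%."""
    return z * math.sqrt(0.25 / n)


def wald_moe(p_hat, n, z=1.96):
    """Margin of error using the observed proportion p_hat (normal approximation)."""
    return z * math.sqrt(p_hat * (1 - p_hat) / n)


n_games = 100_000
print(f"{conservative_moe(n_games):.2%}")  # 0.31%
print(f"{wald_moe(0.076, n_games):.2%}")   # 0.16%
```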

2009-05-15

Expected Values

I've found that probability is enormously alien to a surprising number of students. (Just last week I had students in a basic math class fairly howling at the thought that they might be expected to be familiar with standard dice or a deck of cards). Therefore, I find that I actually have to motivate these discussions with an actual physical game, of the most basic simplicity. If I did cover expected values, here's the rudimentary demonstration I'd use:

The Game: Roll one die.

Player A wins $10 if die rolls {1}.

Player B wins $1 if die rolls {2, 3, 4, 5, 6}

Calculate probabilities (P(A) = 1/6, P(B) = 5/6).

Let a student pick A or B to play, roll the die 12 times (say), and keep a tally of money won on the board (use I's & X's). Player A will likely win more money.

Expected Value: The “average” amount you win on each roll.

E = X*P (X = prize if you win; P = probability to win)

Calculate expected values.
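The same calculation in code, as a minimal sketch: exact fractions for the two expected values, plus the exact chance that Player A out-earns Player B over the 12-roll demonstration (A earns $10 per 1 rolled, and B earns $1 per other roll). The 12-roll count is the one suggested above; the helper names are just for illustration.

```python
from fractions import Fraction
from math import comb

P_A = Fraction(1, 6)  # die shows 1
P_B = Fraction(5, 6)  # die shows 2-6


def expected_value(prize, p):
    """E = X * P: prize if you win times the probability of winning."""
    return prize * p


def prob_a_wins_more(rolls=12):
    """Exact probability that A's total beats B's after the given number of rolls."""
    total = Fraction(0)
    for k in range(rolls + 1):    # k = number of 1s rolled
        if 10 * k > rolls - k:    # A earns $10k, B earns $(rolls - k)
            total += comb(rolls, k) * P_A**k * P_B**(rolls - k)
    return total


print(expected_value(10, P_A), expected_value(1, P_B))  # 5/3 5/6
print(round(float(prob_a_wins_more()), 3))              # 0.619
```

So A averages about $1.67 per roll against B's $0.83, and A comes out ahead in about 62% of 12-roll sessions: likely, but not guaranteed.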

Ex.: Poker situation.

If you bet $4K, then you have a 20% chance to win $30K. Bet or fold? (A: You should bet. E = $30K * 20% = $6K, which is more than the $4K you put in. If you do this 5 times, you pay $20K and expect to win once for $30K, a profit of $10K.)
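The poker decision follows the same rule: compare the expected return with the cost of the bet. Here is a minimal sketch, assuming (as in the answer above) that the $4K is lost if you lose and that a win pays $30K in total.

```python
from fractions import Fraction


def bet_or_fold(cost, prize, p_win):
    """Return the decision and the expected net profit of one bet."""
    expected_return = prize * p_win  # E = X * P
    net = expected_return - cost
    return ("bet" if net > 0 else "fold"), net


decision, net = bet_or_fold(4_000, 30_000, Fraction(1, 5))
print(decision, net, 5 * net)  # bet 2000 10000
```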

2009-05-11

Speaker for the Dead

Of course, I loved Ender's Game. This second book is possibly even more emotionally moving in places (and Card seems to have said he considers it the more "important" book to him), but there are a number of notable structural flaws that I'm not able to shake off.

First is that it's very much working to set up further sequels; there are a number of major plot threads left hanging, and you can start detecting that about halfway through the book (furthermore, I see now that both this and Ender's Game were revised from their original format so as to set up sequels, which takes away from the narrative thrust at the end of each). Second is that there's a central core mystery that the whole book is set up around, and in places people have to be unrealistically tight-lipped with their closest friends so as to prolong the mystery (I got really super-sick of this move from watching Lost). Third is that the central theme seems like a rehash of Ender's Game (you can very much feel Card wrestling with the rationale for the plot of Ender's Game; you can almost hear him musing "why would an alien race feel like killing is socially acceptable or necessary, anyway?", a central premise of the first book, and then constructing this second book so as to have an actual satisfying reason). There are also some obvious clues that the aliens should have been able to pick up on when they kill humans (namely the visually obvious results of the "planting", as witnessed at the end of the book), which would have told them it's a good idea to stop doing such a thing, but apparently they miss them entirely.

But fourth is something that bothers me about lots of science fiction. Although the story spans many years, by way of relativistic time travel (over 3 thousand years, actually), technology never changes during that time. Ender can set off on a 22-year space flight, and when he lands, apparently all the exact same technology is in use for communications, video, computer keyboards, record-keeping, spaceflight landing, government, publishing literature, etc.

In fact, I've never seen any science-fiction literature that manages to deal with Moore's Law (the observation that computing power doubles every 2 years or so). It would be one thing if they conjectured that "Moore's Law ended on date such-and-such because of so-and-so...", but it's always a logical gap that's completely overlooked. Ender is honored to be given an apartment with a holoscreen with "4 times" the resolution of normal screens... but I'm thinking, in 22 years' time (11 doublings), the resolution of every screen should be over 1,000 times that of the ones he left behind on his space-flight. At that rate, I wouldn't bother walking into the next room for one with only "4 times" the resolution.
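The compounding is easy to check. Here is a quick sketch, assuming (as the post does) that screen resolution would track Moore's Law with a doubling every 2 years.

```python
def growth_factor(years, doubling_period=2.0):
    """How many times larger a quantity becomes if it doubles every doubling_period years."""
    return 2 ** (years / doubling_period)


print(growth_factor(22))  # 2048.0 (11 doublings)
```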

Maybe that's a subject that is simply impossible to treat properly in a work of science fiction spanning centuries, but the repeated logical gap (in the face of our own monthly dealings with new technologies) is something that's bothering me more and more. Maybe the Singularity will come and solve this problem for us once and for all.

2009-04-21

Forecast: Hazy on Probability

If, for example, a forecast calls for a 20 percent chance of rain, many people think it means that it will rain over 20 percent of the area covered by the forecast. Others think it will rain for 20 percent of the time, said Susan Joslyn, a cognitive psychologist at the University of Washington who conducted the study.

Of course, how the article should really be titled is simply "Probability is Misunderstood by Many". I see the same -- dare I say stunning -- difficulty that enormous numbers of college students have in interpreting the most basic probability statements. Unfortunately, in the classes that I teach probability is never more than a quick two-week building-block on the way to something else (either in a survey class, fundamentals of inferential statistics, etc.). Part of me wishes we could give a whole semester course in probability (and basic game theory?) to everyone, but I know there's no room for that in the basic curriculum.

Having seen the difficulty, I've tried to emphasize the interpretation process more in later semesters, and I tend to run into more and more resistance against it. Even students who are in the habit of happily crunching on formulas and churning out numerical solutions can be vaguely frustrated and unhappy at being asked what the numbers mean.

This is one where I find it really hard to empathize with the students on the issue (that being rare for me), and I almost can't begin to imagine where I would need to start if I get an incredulous response as to how I knew that 75% was "a good bet" when I mention that in passing. Perhaps just growing up in an environment where I was personally steeped in games as recreation every day for decades (chess, craps, Monopoly, Risk, poker, D&D) marinated the fundamental idea of probability into my brain in a way that can't be shared in a class lecture.

Anyway, some people have suggested that statistics needs to be taught to everyone functioning in a modern society. Even more fundamental (after all, it's foundational to statistics) would be getting the majority of people to have a sense for probability in their gut, because most people currently do not. I'd hypothesize that psychological experiments like those at U. Washington have promise of cornering the precise way that our brains are fundamentally irrational -- that math so simple could be so bewildering in practice suggests a deep limitation (or variant prioritization) in our cognitive abilities.

2009-04-20

Blogging as Software Development

I'm finding that with the advent of electronic publishing/easy blogging, I'm doing the same thing with my writing. I frequently post something and then go back -- hours or days or weeks later -- re-read it, and make minor (but occasionally numerous) changes to the grammar, sentence structure, and so forth. Sometimes I add in a new anecdote, analogy, or quote that I've come up with in the meantime.

Now, once upon a time we all had to do this the other way around. When publishing was entirely by print -- fixed and labor-intensive -- ideally you'd write, draft, revise, edit, etc., before the final "official" version was published and seen by any readers. (Back when I was a high-school student working on an actual typewriter, I would personally skip the draft/revise process, but I'd take a long time mentally picturing each paragraph and sentence before I put it on the page. Except for that time I was writing a paper in the morning, last minute, with my grandfather sitting on the stairs waiting to drive me to school.) The point being, what would our older teachers think if we told them that we could entirely reverse the process -- publish our rough draft first, and then instantly add any revisions we wanted, while people were reading and responding to what we had written?

I'm finding that it's a lot healthier for me, now that blogging software is widely available, to reverse the process in exactly this way. I get my stuff out in the world and get some kind of feedback almost immediately. I can get in the flow of the writing/thinking process without getting interrupted too much by the need to stop and pull out a dictionary or a thesaurus. I can put the draft out there and only come back to it if I truly have a really good idea to add or modify what I've written later on. It feels almost like publishing was just waiting to be done this way for the entire history of writing.

2009-04-09

On Classroom "Contracts"

----------------------------------------------

In the Fall 2008 Thought & Action magazine, professor P.M. Forni had an article called "The Civil Classroom in the Age of the Net". Within that article, he recommends a commonly-seen tactic referred to as a "contract" or "covenant" with the students in the class. Professor Forni writes (p. 21):

Read the covenant to your students on the first day of classes and ask them whether they are willing to abide by it. You can certainly make it part of the syllabus, but if you prefer a more memorable option, bring copies on separate sheets. Then, after the students' approval, you will staple the sheets to the syllabi just before distributing them to your class. Either way, it is of utmost importance that you do not change the original stipulations during the course of the term.

Personally, I think this is one of the more corrosive practices that I've seen widely used in colleges these days.

First of all, the practice is morally ambiguous in that it demands agreement to something being called a "contract" without an opportunity for fair negotiation on both sides. If a student actually does not agree to the presented covenant, what then? In truth, the point of negotiation is when the student formally registers for the class. When instructors bully a classroom of students into signing a statement on the first day of class, we're teaching them a terrible lesson about the gravity and consideration they should take before signing their name to any document.

Secondly, there is a message usually delivered along with these "contracts" along the lines of, "the covenant is an ironclad agreement that can never be broken". That is again a misrepresentation of how contracts are actually used in the business world. Contracts attempt to establish principles of intent, but they are routinely renegotiated and amended. When a disagreement erupts between parties, the existing contract may be used as a starting point for discussions, but if agreement cannot be reached, then arbitration or a court case may result. If this were not so, then the entire field of contract law would not exist.

Thirdly, the common usage of these so-called "covenants" causes some students in classes where this is not used (such as the classes that I teach) to believe that without a signed contract, they have no behavioral or performance requirements whatsoever. Obviously this is not the case (again, it's really the moment of course registration in which they agree to abide by the professor's classroom policies), but I have seen it argued by students confused by the practice.

The classroom "contract" or "covenant" of behavior is a confusing, frankly deceptive practice, and it should be avoided by conscientious instructors.

2009-03-31

Review of "The God Delusion"

I've read several chapters of Dawkins' "The God Delusion" and I've got to say that it's disappointing. It's a worthwhile project ("consciousness raising" on why it's admirable to be an atheist), one that I've wanted to do myself in the past, but this doesn't quite fit the bill. Mostly it's a matter of style. It's simply too wordy; it's too discursive; it's too English. Dawkins seems unable to go more than a single page without some lengthy outside quote; it feels like I just barely get into his train of thought before having to repeatedly jump into some other person's anecdote, poem, or metaphor. I used to take pleasure in nonstop tangents and wordplay like this, but I've found that my patience for it has died out.

I need something that's a bit more punchy, personal, and directly to the point. I would prefer a manifesto, and we don't get that here. Dawkins clearly demonstrates a great deal of literary and cultural knowledge, but I find it altogether distracting. In addition, the foils that he's primarily skewering generally seem to be a batch of kindly, woolly-headed, liberal English archbishops, who seem like very faint opposition. Apparently one of the most common clerical responses that Dawkins hears is "Well, obviously no one actually believes in a white-bearded old man living in the sky anymore", which seems entirely off-topic to someone such as myself who lives in American society. He feels compelled to say things like "This quote is by Ann Coulter, who my American colleagues assure me is not a fictional character from the Onion," which again is completely distracting and puzzling to the American reader.

I'll say this: Dawkins has great book titles. "The God Delusion" sounds like exactly what I'd been looking for, perhaps an explanation or theory of exactly why so many people's brains cling to religion. But frankly that's not what you find between the covers. The keystone Chapter 4 is titled, "Why There Almost Certainly is No God". That sounds compelling, and I could almost start sketching the chapter out in my head, using the modern statistical science of hypothesis testing as a model. But unfortunately the entirety of the chapter is taken up by Dawkins cheerleading for why the theory of natural selection is so great. Great it certainly is. But at best this chapter explains why God is unnecessary for the specific purpose of explaining the evolution of species. People use the idea of God for many, many purposes beyond that, and I think that a far more offense-directed argument needs to be made to fulfill the promise of Chapter 4.

Given Dawkins' focus on biological science and evolution, he has a razor-sharp sensitivity to arguments that "Such-and-such an organ is so complicated, it must have been designed by God"; he spends swaths of several chapters fighting them. Okay, that's a reasonable thing to be irritated by, but here are two observations. One is that I can summarize his argument in a single line. The response to any cleric's "What is the probability that organ X or universe Y could have appeared spontaneously?" should always be "Enormously greater than the probability that a sentient, all-knowing, omnipotent, thought-reading, personally attentive, prayer-answering God could have appeared by chance!" There, I just saved you about 3 chapters.

Secondly, I cannot help but take away the impression that we're fundamentally winning against such arguments. Dawkins makes a good point that a "mystery" to a scientist represents the starting point for an intriguing research project, whereas for a religious person it is a stopping point whose dominion must be reserved for God (historically, complete with threats of violence against exploration). But clearly the "God of the gaps" proponents are being pushed further and further back, perhaps even with greater velocity over time. Whereas previously they would point to organs such as an eye or wing as being impossible to evolve (the opposite having since been demonstrated), they have now, according to Dawkins' examples, retreated to areas such as microbiology and the flagellum of bacteria. Presumably next will be quantum physics, and beyond that, some unidentifiable regress. My point here is that Dawkins' examples seem to take the urgency out of the issue, and at least from his focus on biological science, it seems like there's little we need to do to disprove God except to support ongoing biology research. I suppose that's good news, but I was looking for more of a direct call to action.

In Chapter 5 ("The Roots of Religion") Dawkins has some speculation on the question of "Why does religion exist?". To me, this felt like very specifically the promise of a book titled "The God Delusion". But Dawkins has no specific thesis; he only has a loose collection of a half-dozen tentative speculations. The most tantalizing are the sections called "Religion as a by-product of something else" and "Psychologically primed for religion" (the centerpiece being, maybe children are mentally wired to implicitly trust what their parents say, so as to pass on key survival skills, and that leaves our species vulnerable to mind-viruses such as religion). It's an intriguing section, but it's short, Dawkins doesn't develop it greatly, nor does he stake out a specific position for it. My preference would be for him to have developed a specific, detailed thesis on the subject before presenting it in a book called "The God Delusion".

In summary: A commendable project, a great title, but a disappointing and distracting read for the American reader.

2009-03-20

The Oops-Leon Particle

http://en.wikipedia.org/wiki/Oops-Leon

In short, in 1976 Fermilab thought it discovered a new particle of matter, but it turned out to be a mistake. It was originally called the "upsilon", but after the mistake was caught, it was referred to as the "Oops-Leon", in a pun on the lead researcher, Leon Lederman. I love that wordplay.

The other thing I love is that, like all modern science, the mistake is partly due to statistics, which we must understand as being based on probability. Looking at a spike in some data, it was calculated that there was only a 1-in-50 chance for it not to have been caused by a new particle (that is, a P-value). But with further experimentation it turned out that that was a losing bet; it actually had been some random coincidence that caused the data spike.

That's the kind of thing you need to accept when using inferential statistics; all the statements are fundamentally probabilistic, and sometimes you're going to lose on those bets (and hence so too with all modern science). Apparently the new standard before publishing new particle discoveries is now a 5-standard-deviation result, or more than 99.9999% likelihood that your claim is correct.
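For readers who want to see where the "5 standard deviations" figure lands, here's a minimal Python sketch, assuming a one-sided tail of the standard normal curve (the convention usually quoted for particle discoveries). The helper name `upper_tail` is my own label.

```python
import math

def upper_tail(z):
    """P(Z > z) for a standard normal Z: the chance of a background
    fluctuation at least z standard deviations high, with no new particle."""
    return 0.5 * math.erfc(z / math.sqrt(2))

for z in (2, 3, 5):
    p = upper_tail(z)
    print(f"z = {z}: P = {p:.3g}, about 1 in {1 / p:,.0f}")
```

At z = 5 the tail is roughly 1 in 3.5 million, which is where the "more than 99.9999%" figure comes from.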

And you know what? Someday that bet will also be wrong. Such is probability; so is statistics; and hence so is science.

2009-03-06

PEMDAS: Terminate With Extreme Prejudice

So, now it's time for my official MadMath "Kill the Shit Out of PEMDAS" blog posting.

It's a funny thing, because I'd never heard of the PEMDAS acronym until I started teaching community college math. None of my friends had ever heard of it; artists, writers, engineers, what-have-you, from Maine or Massachusetts or Indiana or France or anywhere. But for some reason these urban schools teach it as a memory-assisted crutch for sort of getting the order of operations about halfway-right (PEMDAS: Parentheses, Exponents, Multiplying, Division, Addition, Subtraction.)

But the problem is, it's only half-right and the other half is just flat-out wrong. Wikipedia puts it like this ( http://en.wikipedia.org/wiki/Order_of_operations ):

"In the United States, the acronym PEMDAS... is used as a mnemonic, sometimes expressed as the sentence 'Please Excuse My Dear Aunt Sally' or one of many other variations. Many such acronyms exist in other English speaking countries, where Parentheses may be called Brackets, and Exponentiation may be called Indices or Powers... However, all these mnemonics are misleading if the user is not aware that multiplication and division are of equal precedence, as are addition and subtraction. Using any of the above rules in the order addition first, subtraction afterward would give the wrong answer..."

In my experience, none of the students who learn PEMDAS are aware of the equal-precedence (ties) between the inverse operations of multiplication/division and addition/subtraction. Therefore, they will always get computations wrong when that is at issue. (Maybe prior instructors managed to scrupulously avoid exercises where that cropped up, but I'm not sure how exactly.)

Here's a proper order of operations table for an introductory algebra class. I've taken to repeatedly copying this onto the board almost every night because it's so important, and PEMDAS has caused so much prior brain damage:

- Parentheses

- Exponents & Radicals

- Multiplication & Division

- Addition & Subtraction

An example I use in class: Simplify 24/3*2. Correct answer: 16 (24/3*2 = 8*2 = 16, left-to-right). Frequently-seen incorrect answer: 4 (24/3*2 = 24/6 = 4, following the faulty PEMDAS implication that multiplying is always done before division).
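For the programmers in the audience, here's a minimal Python sketch of the 4-stage table as a precedence-climbing evaluator. The technique is my choice for illustration, and the sketch only handles non-negative numbers, parentheses, and + - * / ^ (radicals and unary minus are left out). Swapping in a hypothetical "literal PEMDAS" table, where every letter gets its own level, reproduces the frequently-seen wrong answer of 4.

```python
import operator
import re

# The 4-stage table: a higher number is done first. Operators that share a
# number tie, and ties are applied left to right; the one exception is ^,
# which by the usual convention groups right to left (2^3^2 = 2^9).
CORRECT = {"+": 1, "-": 1, "*": 2, "/": 2, "^": 3}

# Hypothetical "literal PEMDAS" reading: every letter gets its own level,
# so M outranks D and A outranks S. This is the misreading, not a real rule.
PEMDAS_LITERAL = {"-": 1, "+": 2, "/": 3, "*": 4, "^": 5}

RIGHT_ASSOCIATIVE = {"^"}
APPLY = {"+": operator.add, "-": operator.sub, "*": operator.mul,
         "/": operator.truediv, "^": operator.pow}

def evaluate(text, precedence=CORRECT):
    """Evaluate a simple expression with non-negative numbers, parentheses,
    and + - * / ^ (no unary minus) by precedence climbing."""
    tokens = re.findall(r"\d+(?:\.\d+)?|[-+*/^()]", text)
    pos = 0

    def parse_atom():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token == "(":
            value = parse_expr(1)
            pos += 1  # step past the matching ")"
            return value
        return float(token)

    def parse_expr(min_level):
        nonlocal pos
        left = parse_atom()
        while (pos < len(tokens) and tokens[pos] in precedence
               and precedence[tokens[pos]] >= min_level):
            op = tokens[pos]
            pos += 1
            level = precedence[op]
            # Left-to-right ties: the right operand may only contain strictly
            # higher levels (unless the operator is right-associative).
            right = parse_expr(level if op in RIGHT_ASSOCIATIVE else level + 1)
            left = APPLY[op](left, right)
        return left

    return parse_expr(1)

print(evaluate("24/3*2"))                  # 16.0
print(evaluate("24/3*2", PEMDAS_LITERAL))  # 4.0
```

The same literal table also turns 10-3+2 into 10-(3+2) = 5 instead of 9, which is the addition-before-subtraction trap from the Wikipedia quote.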

If you're looking at PEMDAS and not the properly-linked 4-stage order of operations, you miss out on all of the following skills:

(1) You solve an equation by applying inverse operations (i.e., cleaning up one side until you've isolated a variable). If you don't know what operation inverts (cancels) another, then you'll be out of luck, especially with regard to exponents and radicals. Otherwise known as "the re-balancing trick", or in Arabic, "al-jabr".

(2) Operations on powers all follow a downshift-one-operation shortcut. Examples: (x^2)^3 = x^6 (exp->mul), sqrt(x^6)=x^3 (rad->div), x^2*x^3 = x^5 (mul->add), x^5/x^3 = x^2 (div->sub), 3x^2 +5x^2 = 8x^2 (considering a shift below add/sub to be "no operation"). If you don't see that, then you've got to memorize what looks like an overwhelming tome of miscellaneous exponent rules. (And from experience: No one succeeds in doing so.)

(3) Distribution works with any operation applied to an operation one step below. Examples: (x^2*y^3)^2 = x^4*y^6 (exp across mul), (x^2/y^3)^2 = x^4/y^6 (exp across div), 3(x+y) = 3x+3y (mul across add), sqrt(x^2*y^6) = x*y^3 (rad across mul), etc. However, the following cannot be simplified by distribution and are common traps on tests: (x^3+y^3)^2 (exp across add), sqrt(x^6-y^6) (rad across sub), etc.

(4) All commutative operations are on the left, all non-commutative operations are on the right (the way I draw it). Also, any commutative operation applied to zero results in the identity of the operation immediately below it. Examples: x^0 = 1 (the multiplicative identity), x*0 = 0 (the additive identity), x+0 = x (no operation), etc. The first example is usually forgotten/done wrong by introductory algebra students.

(5) The fact that each inverse operation generates a new set of numbers (somewhat historically speaking). Examples: Start with basic counting (the whole numbers). (a) Subtraction generates negatives (the set of integers). (b) Division generates fractions (the set of rationals). (c) Radicals generate roots (part of the greater set of reals).

(6) Finally, per my good friend John S., perhaps the most important oversight of all is that PEMDAS misses the whole big idea of the order of operations: "More powerful operations are done before less powerful operations". I write that on the board, Day 1, even before I present the basic order-of-operations table. It's not a bunch of random, disconnected rules; it's one big idea with pretty obvious after-effects. (See John's MySpace blog.)
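A quick numerical spot check of the examples in (2) through (4), as a minimal Python sketch: plug arbitrary positive numbers in for x and y (my choice of 2.3 and 1.7 is an assumption, nothing special) and see which pairs agree. It's not a proof, but it does show that the distribution traps really do produce different values.

```python
import math

# Arbitrary positive test values; positivity (and x > y) keeps every square
# root below real-valued without absolute-value signs.
x, y = 2.3, 1.7

# Rules from (2), (3), and (4): each pair should agree.
identities = {
    "(x^2)^3 = x^6": ((x**2) ** 3, x**6),
    "sqrt(x^6) = x^3": (math.sqrt(x**6), x**3),
    "x^2*x^3 = x^5": (x**2 * x**3, x**5),
    "x^5/x^3 = x^2": (x**5 / x**3, x**2),
    "3x^2 + 5x^2 = 8x^2": (3 * x**2 + 5 * x**2, 8 * x**2),
    "(x^2*y^3)^2 = x^4*y^6": ((x**2 * y**3) ** 2, x**4 * y**6),
    "(x^2/y^3)^2 = x^4/y^6": ((x**2 / y**3) ** 2, x**4 / y**6),
    "3(x+y) = 3x+3y": (3 * (x + y), 3 * x + 3 * y),
    "sqrt(x^2*y^6) = x*y^3": (math.sqrt(x**2 * y**6), x * y**3),
    "x^0 = 1": (x**0, 1.0),
    "x*0 = 0": (x * 0, 0.0),
    "x+0 = x": (x + 0, x),
}

# The test traps from (3): distributing across add/sub gives a different value.
traps = {
    "(x^3+y^3)^2 vs x^6+y^6": ((x**3 + y**3) ** 2, x**6 + y**6),
    "sqrt(x^6-y^6) vs x^3-y^3": (math.sqrt(x**6 - y**6), x**3 - y**3),
}

for name, (lhs, rhs) in {**identities, **traps}.items():
    print(f"{name}: {lhs:.6g} vs {rhs:.6g}, equal: {math.isclose(lhs, rhs)}")
```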

So as you can see, PEMDAS is like a plague o'er the land, a band of Vandals burning and pillaging students' cultivated abilities to compute, solve equations, simplify powers, and see connections between different operations and sets of numbers. If you see PEMDAS, consider it armed and dangerous. Shoot to kill.

Never-Ending Amazement

So here's a quick observation I've said aloud several times. For me, teaching college math is a continual exploration of the things people don't know. If I listen really carefully, I continually discover that the most basic, fundamental ideas imaginable are things that a lot of people in a community college simply never encountered. Whenever I start teaching a class and think "oh, christ, that's so basic it'll bore everyone to tears, skip over that quickly," I discover at some later point that a good portion of people have never heard of it in their life.

That's actually a good thing. It keeps me interested with this ongoing detective work I do to see exactly how far the unknowns go. And it provides the opportunity to share in the never-ending amazement, through the eyes of a student who never saw something really fundamental.

Here's one from this week (which I got to run in each of two introductory algebra classes). We're going to want to simplify expressions, with variables, even if we don't know what the variables are. ("Simplifying Algebra", I write on the board.) To do that, we can use a few tricks based on the overall global structure of numbers and their operations. I'm about to give 3 separate properties of numbers; here's the first. ("Commutative Property", I write on the board.)

Let's think about addition; say we take two numbers, like 4 and 5. 4 plus 5 is what? ("9", everyone says.) Now, if I reverse the order, and do 5 plus 4, I get what? ("9", everyone says.) Same number. Now, do you think that will work with any two numbers? With complete confidence, almost everyone in a room full of 25 people says at once, "No, absolutely not."

So of course, I'm sort of thunder-struck by this response. Okay, I say, I gave you an example where it does work out, if you say "no" you need to give me an example where it doesn't work out. One student raises his hand and says "if one is positive and one negative". Okay I say, let's check 1 plus -8 ("-7"). Let's check -8 plus 1 ("-7"). So it does work out. Now do you think it works for any two numbers? At this point I get a split-vote, about half "yes" and half "no".

Okay, what else do you think it won't work for? One student raises her hand and says (and I bless her deeply for this), "if it's the same number". Uh, okay, let's check 5 plus 5 ("10"). And if I flip that around I again have 5 plus 5 ("10"). So now do you think it works for any two numbers?

At rather great length I finally get everyone agreeing "yes" to the Commutative Property of Addition. And it's obviously a point that no one in the class had ever realized before, that addition is perfectly symmetric for all types of numbers. You can sort of see a bit of a stunned look on some people's faces that they hadn't realized that before. Isn't that just incredibly amazing?

After that, we get to think about the Commutative Property of Multiplication (pointing out that commutativity does not work for subtraction or division), look at the Associative and Distributive Properties, do some simplifying exercises with association and distribution, and so on and so forth. But the thing I can't get over this week is that something so simple as flipping around an addition and automatically getting the same answer can, all by itself, be an enormous revelation if you listen closely enough.

2009-02-22

Interpreting Polls: A MadMath Open Letter

An online forum says "Most of our members are not in favor of switching to a new product. We polled 900 members, and only 37% are in favor of switching (margin of error +/-3%)." Orius reads this and responds, "That poll doesn't convince me of anything. This forum has over 74,000 members, so that's only a small fraction of forum members that you polled." Do you agree with Orius' reasoning? Explain why you do or do not agree. Refer to one of our statistical formulas in your explanation.

Here is the best possible answer to that test question:

No, Orius is mistaken. Population size is not a factor in the margin-of-error formula: E = z*σ/√n (z-score from desired confidence level, σ population standard deviation, n sample size).

Now, I make a point to ask a question like this right at the end of my statistics class because it's an enormously common criticism of survey results. It's also enormously flat-out wrong. (In this case, the quotes I used in the test question were copied directly from a discussion thread at gaming site ENWorld from last year).

Two weeks later, I get up on Sunday morning and eat a donut while reading famed technical news site Slashdot. Here's what I get to read in an article summary on the front page:

Adobe claims that its Flash platform reaches '99% of internet viewers,' but a closer look at those statistics suggests it's not exactly all-encompassing. Adobe puts Flash player penetration at 947 million users out of a total 956 million internet-connected devices, but the total number of PCs is based on a forecast made two years ago. What's more, the number of Flash users is based on a questionable internet survey of just 4,600 people — around 0.0005% of the suggested 956,000,000 total. Is it really possible that 99% penetration could have been reached?

Below, I present my open response to this Slashdot summary:

What's more, the number of Flash users is based on a questionable internet survey of just 4,600 people — around 0.0005% of the suggested 956,000,000 total.

That's the single dumbest thing you can say about polling results. I just asked this question on the last test of the statistics class I teach two weeks ago. Neither the population size nor the sampling fraction (ratio of the population surveyed) is in any way a factor in the accuracy of a poll.

Opinion polling margin of error is computed as follows (95% level of confidence, with the usual shortcut z≈2 and worst-case p=0.5): E = 1/sqrt(n) = 1/sqrt(4600) ≈ +/-1.5%. So from this information alone, the actual percent of Flash users is 95% likely to be somewhere between about 97.5% and 100%. Again, note that population size is not a factor in the formula for margin of error.

As a side note, polling calculations are actually most accurate if you have an infinite population size (that's one of the standard mathematical assumptions in the model). If anything, a complication arises if the population size gets too small, at which point a correction formula can be added once the sampling fraction rises over 5% of the population or so (and that correction makes the margin of error smaller, not larger).
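Here's a minimal Python sketch of the margin-of-error formulas in the last two paragraphs, assuming the usual normal approximation for a proportion. `conservative_margin` is the 1/sqrt(n) shortcut (z≈2 and the worst case p=0.5, since 2*sqrt(0.25/n) = 1/sqrt(n)). `margin` uses the observed proportion and, only if you pass a population size, applies the standard finite population correction sqrt((N-n)/(N-1)). Both function names are my own.

```python
import math

def conservative_margin(n):
    """95% margin-of-error shortcut for a poll: z = 2, p = 0.5, so
    E = 2 * sqrt(0.5 * 0.5 / n) = 1 / sqrt(n)."""
    return 1 / math.sqrt(n)

def margin(p_hat, n, z=1.96, population=None):
    """Margin of error for a sample proportion p_hat from n responses.
    If a population size is given, apply the finite population correction."""
    e = z * math.sqrt(p_hat * (1 - p_hat) / n)
    if population is not None:
        e *= math.sqrt((population - n) / (population - 1))
    return e

# The forum poll: 900 responses out of 74,000 members.
print(conservative_margin(900))  # 1/30, about 0.033
print(margin(0.37, 900), margin(0.37, 900, population=74_000))

# The Flash survey: 4,600 responses out of a claimed 956,000,000 devices.
print(conservative_margin(4600))
print(margin(0.99, 4600), margin(0.99, 4600, population=956_000_000))
```

Notice that population size only enters through the correction factor, and for 74,000 members it barely moves the result (a factor of about 0.994); for 956 million it's indistinguishable from 1.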

There might be other legitimate critiques of any poll (like perhaps a biased sampling method). But a small sampling fraction is not one of them. It's about as ignorant a thing as you can say when interpreting poll results (on the order of "the Internet is not a truck").

http://en.wikipedia.org/wiki/Margin_of_error#Effect_of_population_size

Finally, a somewhat more direct statement of the same idea:

This Is The Dumbest Goddamn Thing You Can Say About Statistics

2009-02-19

Oops: Margins of Error

Well, it turns out that was a mistake on my part. As I groggily woke up this morning (the time when most of my best thinking occurs), I realized I'd made a mistake with a hidden assumption that the percentage of people supporting Candidates A and B were independent... when obviously (on reflection) they're not; in the simplest example every vote for A is a vote taken away from B. You can take A's support and directly compute B's support.

So if I do a proper hypothesis test with this understanding (H0: pA = 0.5 versus HA: pA > 0.5), with polling size n=100 and 55% polled support for A (as an example), you get a P-value of P = 0.1587 (well above the limit of alpha = 0.05 at the 95% confidence level), showing indeed that we cannot reject the null hypothesis.
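That test can be sketched in a few lines of Python, assuming the usual normal approximation to the binomial (the function name is my own). Under H0 the standard error is sqrt(0.5*0.5/100) = 0.05, so 55% polled support sits exactly z = 1 standard error above 50%.

```python
import math

def one_proportion_z_test(p_hat, n, p0=0.5):
    """One-sided z-test of H0: p = p0 versus HA: p > p0.
    Returns (z, P-value), using the standard error computed under H0."""
    se = math.sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # upper tail of the standard normal
    return z, p_value

z, p = one_proportion_z_test(0.55, 100)
print(f"z = {z:.2f}, P = {p:.4f}")  # z = 1.00, P = 0.1587
```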

In short, it turns out that the statement "A and B are within the margin of error, so we can't be sure exactly who is ahead", is actually correct at the same level of confidence as the margin of error was reported. In fact, to extend that result, there will be a window even if A and B are beyond the margin of error where you still can't pass a hypothesis test to conclude who is really ahead. (Visually, intervals formed by the margins-of-error overlap a little bit too much.)

Mea culpa. I removed the erroneous post from yesterday and left this one.

2009-02-13

Basic Teaching Motivation

For the intermediate algebra class that I regularly teach (which is truly an enormous challenge for most of the students I get), I'm considering this very short mission statement: "Can you follow rules? (Can you remember them?)"

(Here's how I might develop this:) When I say that, I don't mean to come off as some kind of control freak. There are both Good and Bad rules in the world. You should take a philosophy course or some kind of ethical training to identify for yourself what rules are Good (and effective, and you should dedicate yourself to following), and what rules are Bad (and you should dedicate yourself to challenging and overthrowing).

But this course is specifically about the skill of, when you're handed a Good rule, do you have the capacity to quickly digest it and remember it and follow it? If you can't do that, then you're not allowed to graduate from college. The purpose is twofold: (1) testing and training in following rules in general, and (2) an introduction to mathematical logic in specific. The first is a requirement before you're expected to be given responsibility in any professional environment. The second gives you a platform to understand principles of mathematics, which are usually the best, most effective, and most powerful rules that we know of.

So, if you can't follow rules, or if you simply can't remember them, it will be frankly impossible to pass a course like this, and you'll get trapped into a cycle of taking this course over and over again without success.

(Honestly, as an aside, I think the primary challenge to students in my intermediate algebra course is simply an incapacity to remember things from day to day. I know now that we can literally end one day with a certain exercise, and have everyone able to do it, and start the very next day with the exact same exercise and have half the classroom unable to do it.)

I conclude, as I've expressed before, with a possible epitaph:

I want to foster a sense of justice.

A love of following rules that are good.

A love of destroying rules that are bad.

Division By Zero

One problem that occurs to me now is that, when discussing it extemporaneously, I myself tend to forget that there are two very separate and distinct cases involved: dividing any run-of-the-mill number by zero, versus dividing zero by zero. I think the following is a pretty complete proof:

All variables below are in some set U with multiplication.

Define: Zero (0) as, for all x: 0x = 0.

Define: Division (a/b) as the solution of a = bx (that is, a/b=x iff a=bx).

To prove that division by zero is undefined, let's assume the opposite: there exists some number n such that n/0 = x, that is, n = 0x (def. of division), where x is a unique element of U.

Case 1: Say n≠0. Then n = 0x => n=0 (def. of zero). This is a contradiction that shows this case cannot really occur.

Case 2: Say n=0. So 0 = 0x (def. of division), which is true for all x (def. of zero). Thus x is not a unique number, contradicting our initial assumption.

To summarize: If you try to divide any nonzero number by zero, the answer is "Not A Number". However, if you try to divide zero by zero, the answer is "All Numbers Simultaneously".

(Side note: I guess there's an interesting gap in what I just wrote there, that maybe in Case #2 if the set U just has one single element [zero], then actually you can identify x=0, and do in fact have definable division by zero for that one, degenerate case. Hmm, never noticed that.)
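A brute-force illustration of both cases, as a minimal Python sketch: it assumes the integers mod m as a stand-in for the set U (Python's % operator does the wrapping) and searches for every x with n = 0x, i.e., every candidate for n/0 under the definition above. The function name is my own. A nonzero n gets no candidates (Case 1), n = 0 gets all of them (Case 2), and the one-element set {0} (m = 1) gets exactly one, the degenerate case from the side note.

```python
def candidates_for_n_over_zero(n, m):
    """All x in {0, 1, ..., m-1} (integers mod m) satisfying n = 0*x (mod m),
    i.e., every value that could serve as n/0 under the definition
    a/b = x iff a = b*x."""
    return [x for x in range(m) if (0 * x) % m == n % m]

print(candidates_for_n_over_zero(6, 7))  # [] : Case 1, no number works
print(candidates_for_n_over_zero(0, 7))  # [0, 1, 2, 3, 4, 5, 6] : Case 2, all numbers
print(candidates_for_n_over_zero(0, 1))  # [0] : the degenerate set U = {0}
```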

Anyway, that's a fairly intricate amount of basic logic for my intermediate "didn't even know about division by zero" algebra students (2 kind-of-exotic definitions, two cases from an implied "or" statement, and doubled proof by contradiction). So I think what I wind up doing, to give them at least some sense of it, is to consider a single numerical example and gloss over the secondary n=0 case:

Say x = 6/0.

So: x*0 = 6 <-- Multiply both sides by zero. Violates rule (for all x: x*0 = 0), so x is not any number (NAN). (Of course, normally you can't multiply both sides of an equation by zero. But you could if division by zero was defined [see degenerate case above, for example] and that actually is the assumption here. It's a sneaky proof-by-contradiction, for one numerical case, without calling it out as such.)

Now, I pick the number "6" above for a very specific reason; if that still leaves someone unconvinced, it gives me the option to do the following. Take six bits of chalk (or torn-up pieces of paper, or whatever), put them in my hand, and write 6/2 = ? on the board. Ask one student to take two pieces out, then another student, and another, until they're all gone. How many students can I do this with before the chalk is gone? That's the answer to the division problem: 3 (obviously enough).

Now take back the chalk and write 6/0 = ? on the board. Ask one student to take zero pieces out, and then another, and another. How many students can I do this to before the chalk is gone? That's the answer to this division problem: Infinitely many, which is (again) not a number defined in our real number system. (If we have a short discussion about infinity at this point, and even if I leave students with the message "I want to say this should be infinity", I think that's okay.)
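The chalk demonstration is division as repeated subtraction, which can be sketched in Python as below. The cap `max_students` is my own addition so the loop can stop: with zero pieces per turn the chalk never runs out, and the function reports that by returning None instead of a number. (For uneven cases like 7 pieces taken 2 at a time, it gives the whole-number quotient and leaves the remainder in your hand.)

```python
def students_served(pieces, take, max_students=1000):
    """Hand out `take` pieces per student until there aren't enough left for
    another full turn. Returns the number of students, or None if we reach
    max_students without running out (the 6/0 situation)."""
    students = 0
    while pieces > 0 and pieces >= take:
        if students == max_students:
            return None  # still have chalk after max_students turns: no finite answer
        pieces -= take
        students += 1
    return students

print(students_served(6, 2))  # 3
print(students_served(6, 0))  # None
```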

This is all part of an early lecture on basic operations with 1 and 0, serving to (a) remind students who routinely trip up over them, (b) lay a foundation for simplifying exercises (why you're expected to simplify x*1 but not x+1), and (c) show how much more convenient/fast it is to express rules in algebraic notation, versus regular English. It's also around that time that we have to categorize different sets of numbers, sometimes resulting in the question "what's not in the set of Real numbers?", for which I recommend the examples of infinity (∞) and the square root of a negative number (like √(-4)). So, a lot of those ideas segue together.

Mathematicus Fhtagn

2009-02-10

Dice Distributions

http://www.superdan.net/download/DiceSamples.xls

It provides a nice picture of the evolution of the distribution, as more dice are added, into one that (a) more closely matches a normal curve, as per the Central Limit Theorem, and also (b) gets narrower and narrower, as the standard deviation of the dice average falls. I printed out the first page (n=1 to 3) for my statistics class, in an attempt to intuitively anticipate the CLT.

The other nice thing here is that all the numbers come out of a programmed macro function for summed dice frequency (which I picked up from the Wikipedia article on Dice, and I wanted to see implemented in code): F(s,i,k) = sum n=0 to floor((k-i)/s): (-1)^n * choose(i, n) * choose(k-s*n-1, i-1).

http://en.wikipedia.org/wiki/Dice#Probability
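Here's a minimal Python sketch of that summed-dice formula, where F(s,i,k) counts the ways i dice with s sides can total k (Python rather than a spreadsheet macro; the function names are my own). It checks the formula against a brute-force count and then computes the standard deviation of the dice average, which for d6s should shrink like sqrt(35/12)/sqrt(i), matching the narrowing seen in the spreadsheet.

```python
import math
from collections import Counter
from itertools import product

def dice_sum_ways(s, i, k):
    """F(s, i, k): number of ways i fair s-sided dice can total k."""
    return sum((-1) ** n * math.comb(i, n) * math.comb(k - s * n - 1, i - 1)
               for n in range((k - i) // s + 1))

def brute_force_ways(s, i):
    """Count totals directly by listing every roll (fine for small i)."""
    return Counter(sum(roll) for roll in product(range(1, s + 1), repeat=i))

def average_mean_sd(s, i):
    """Mean and standard deviation of the average of i dice, computed from F."""
    total = s ** i
    sums = range(i, s * i + 1)
    mean = sum(k * dice_sum_ways(s, i, k) for k in sums) / total / i
    var = sum((k / i - mean) ** 2 * dice_sum_ways(s, i, k) for k in sums) / total
    return mean, math.sqrt(var)

for i in (1, 2, 3):
    mean, sd = average_mean_sd(6, i)
    print(f"{i}d6: average has mean {mean:.2f}, SD {sd:.3f}")
```

math.comb needs Python 3.8 or later.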

2009-02-08

Sharp-Edged Dice

http://deltasdnd.blogspot.com/2009/02/testing-balanced-die.html

As a follow-up to that, I tried to do a little research on what game manufacturers do for quality assurance on their dice. While I didn't find that specific information, I did find something that absolutely warmed and delighted my heart. It's the long-time owner of the dice company Gamescience, "Colonel" Lou Zocchi, engaging in a 20-minute rant about how crappy his competitors' dice are, because they engage in a sand-blasting process that rounds off all the edges of their dice in an irregular fashion, and therefore generates slightly incorrect probability distributions. (As an aside, this actually does match my own independent test of the style of dice he produced.)

Lou's retiring this year and selling the company, but he's still got the fire, and I'll give him an official MadMath salute for his rant on dice probabilities. Watch it here:

http://www.gamescience.com/

2009-01-30

Sampling Error Abbreviation?

Can't use "SE" because that's the abbreviation for "standard error", which is a totally different thing (namely, the standard deviation of all possible sampling errors).

Damn it.

2009-01-24

First-Day Statistics

Sampling a Deck of Cards: Let's act as a scientific researcher, and say that somehow we've encountered a standard deck of cards for the first time, and know practically nothing about it. We'd like to get a general idea of the contents of the deck, and for starters we'll estimate the average value (mean) of all the cards. Unfortunately, our research budget doesn't give us time to inspect the whole deck; we only have time to look at a random sample of just 4 cards.

Now, as an aside, let's cheat a bit and think about the structure of a deck of cards (not that our researcher would know any of this). For our purposes we'll let A=1, numbers 2-10 count face value, J=11, Q=12, K=13. We know that this population has size N=52; if you think about it you can derive that the actual mean is μ=7; and I'll just come out and tell the class that I already calculated the standard deviation as σ=3.74. (Again, our researcher probably wouldn't know any of this in advance.)
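The "cheat" numbers are easy to confirm. Here's a minimal Python sketch using the same card values (A=1 through K=13, four of each) and the population standard deviation (dividing by N rather than N-1, since the deck is the whole population).

```python
import statistics

# Four suits of ranks 1 (A) through 13 (K), valued as described above.
deck = [rank for rank in range(1, 14) for _suit in range(4)]

mu = statistics.mean(deck)
sigma = statistics.pstdev(deck)  # population SD: the deck is the entire population
print(len(deck), mu, round(sigma, 2))
```

The exact value of σ works out to sqrt(14), which rounds to 3.74.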

So granted that we wouldn't really know what μ is, what we're about to do is take a random sample and construct a standard 95% confidence interval for the most likely values it could be. In our case we'll be taking a sample size n=4, calculating the average (sample mean, here denoted x'), and construct our confidence interval. As a further aside, I'll point out that a 95% confidence level can be simplified into what we call a z-score, approximately z=2.

At this point I shuffle the deck, draw the top 4 cards, and look at them.

We take the values of the four cards and average them (for example, the last time I did this I got cards ranked 7, 3, 5, and 4; sample mean x' = 19/4 = 4.75). Then I explain that constructing a confidence interval usually involves taking our sample statistic and adding/subtracting some margin of error, thus: μ ≈ x'±E (again, x' is the "sample mean"; E is the "margin of error"). Then we turn to the formula card for the course and look up, near the end of the course, the fact that for us E = z*σ/√n. We substitute that into our formula and obtain μ ≈ x'±z*σ/√n.

So at this point we know the value of everything on the right side of the estimation, and substitute it all in and simplify (the sample mean x', z=2, σ=3.74, and n=4, all above). The arithmetic here is pretty simple, in this example:

μ ≈ x' ± z*σ/√n

= 4.75 ± 2*3.74/√4

= 4.75 ± 2*3.74/2

= 4.75 ± 3.74

= 1.01 to 8.49
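The same arithmetic as a small Python function (the name `z_interval` is my own), assuming σ is known and z=2 as in the simplified classroom version:

```python
import math

def z_interval(sample, sigma, z=2.0):
    """Confidence interval x_bar +/- z*sigma/sqrt(n), assuming sigma is known."""
    n = len(sample)
    x_bar = sum(sample) / n
    e = z * sigma / math.sqrt(n)
    return x_bar - e, x_bar + e

low, high = z_interval([7, 3, 5, 4], sigma=3.74)
print(f"95% CI: {low:.2f} to {high:.2f}")  # 95% CI: 1.01 to 8.49
```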

So, there's our confidence interval in this case (95% CI: 1.01 to 8.49). Our researcher's interpretation of that: "There is a 95% chance that the mean value of the entire deck of cards is somewhere between 1.01 and 8.49". That's a pretty good, concentrated estimation for μ on the part of our researcher. And in this case we can step back and ask the question: Is the population mean value actually captured in this interval? Yes (based on our previous cheat), we do in fact know that μ=7, so our researcher has successfully captured where μ is with a sample of only 4 cards out of an entire deck.

That usually goes over quite well in my introductory statistics class.

Backstage -- The Ways In Which I Am Lying: Look, I'm always happy to dramatically simplify a concept if it gets the idea across (in this case, the overall process of inferential statistics, the ultimate goal of my course, as treated in the very first hour of class). Let's be upfront about what I've done here.

The primary thing that I'm abusing is that this formula for margin-of-error, and hence the confidence interval, is usually only valid if the sampling distribution follows a normal curve. There are two ways to obtain that: either (a) the original population is normally distributed, or (b) the sample size is large, triggering the Central Limit Theorem to turn our sampling distribution normal anyway.

Neither of those conditions apply here. The deck of cards has a uniform distribution, not normal (4 cards each in all the ranks A to K). And obviously our sample size n=4, necessary to make the demonstration digestible in the available time, is not remotely a "large enough" sample size for the CLT. But granted that the deck of cards has a uniform distribution, that does help us in it becoming "normal-like" a bit faster than some wack-ass massively skewed population, so the example is still going to work out for us most of the time (see more below).

At the same time, ironically enough, I also have too large of a sample size, in terms of a proportion to the overall population, for the usual margin-of-error formula. Here I'm sampling 4/52 = 7.69% of the population, and if that's more than around 5%, technically we're supposed to use a more complicated formula that corrects for that. Or we could legitimately avoid that if we were sampling with replacement, but we're not doing that, either (re-shuffling the deck after each single card draw is a real drag).

However, even without those technical guarantees, everything does in fact work out for us in this particular example anyway. I wrote a computer program to exhaustively evaluate all the possible samples of size 4 from a deck of cards, and the result is this: What I'm calling a 95% confidence interval above, will actually catch our population mean over 95.7% of the time; so if anything the "cheat" here is that we know the interval has more of a chance of catching μ than we're really admitting.

Some other things may be obvious: we're assuming we know the population standard deviation σ in advance, but that's a pretty standard instructional warm-up before dealing with the more realistic case of unknown σ. And of course I've approximated the z-score for a 95% CI as z=2, when more accurately it's z=1.960 -- but you'll notice above that using z=2 magically cancels with the factor √n = √4 = 2 in the denominator of our formula, nicely abbreviating the number-crunching.
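For concreteness, here is the arithmetic behind that cancellation, reconstructed under the assumption that ranks are scored A=1 through K=13 (which matches the -0.24 to 7.24 interval for x'=3.5 mentioned below). The variance line uses the standard result (N² - 1)/12 for a uniform distribution on 1, ..., N; having four suits of each rank doesn't change it.

```latex
\begin{aligned}
\mu &= 7, \qquad \sigma^2 = \frac{13^2 - 1}{12} = 14, \qquad \sigma = \sqrt{14} \approx 3.74 \\
E &= z\,\frac{\sigma}{\sqrt{n}} = 2 \cdot \frac{\sigma}{\sqrt{4}} = \sigma \approx 3.74 \\
\text{CI} &= \bar{x} \pm E \approx \bar{x} \pm 3.74
\end{aligned}
```

With the more accurate z = 1.960, the margin would instead be about 1.96 × 3.74 / 2 ≈ 3.67, and the cancellation is lost.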

The other thing that might happen when you run this demonstration is an interval with a negative endpoint (even while catching μ inside), which would be ugly and might draw some grief from certain students (e.g., if x'=3.5, then the interval is -0.24 to 7.24). Nonetheless, the numerical examination shows that there's a 94.8% chance of getting what I'd call a "good result" for the presentation: both catching μ and avoiding any negative endpoint.
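The "good result" rate can be checked the same brute-force way. This is again a sketch under the assumed scoring A=1 through K=13 with z = 2; `interval` and `good_result_rate` are hypothetical names. A "good result" is counted when the interval contains μ and its lower endpoint is not negative.

```python
from itertools import combinations
from math import sqrt

# Assumed scoring: A=1, 2..10 at face value, J=11, Q=12, K=13; four suits each.
DECK = [rank for rank in range(1, 14) for _suit in range(4)]
MU = sum(DECK) / len(DECK)
SIGMA = sqrt(sum((x - MU) ** 2 for x in DECK) / len(DECK))


def interval(x_bar: float, n: int = 4, z: float = 2.0) -> tuple[float, float]:
    """Known-sigma confidence interval x_bar +/- z*sigma/sqrt(n)."""
    margin = z * SIGMA / sqrt(n)
    return x_bar - margin, x_bar + margin


def good_result_rate(n: int = 4) -> float:
    """Fraction of n-card hands whose interval catches MU and has a
    non-negative lower endpoint."""
    good = total = 0
    for hand in combinations(DECK, n):
        lo, hi = interval(sum(hand) / n, n)
        total += 1
        if lo <= MU <= hi and lo >= 0:
            good += 1
    return good / total


print(interval(3.5))  # the x' = 3.5 example: roughly (-0.24, 7.24)
print(f"good-result rate: {good_result_rate():.4f}")
```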

At first I considered a sample size of n=3, which would shorten the card-drawing part of the demonstration; an exhaustive check shows this still gives a 95.4% chance to catch μ in the resulting interval. Alternatively, you might consider n=5, which guarantees that any interval catching μ has no negative endpoint. In both those cases you lose the cancellation with the z-score, so there would be more calculator number-crunching involved if you did it that way.
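To compare n=3, 4, and 5 without looping over millions of individual hands, one option (my substitution, not necessarily how the original program worked) is to enumerate rank combinations and weight each by how many suit choices produce it: a rank appearing k times in a hand can come from comb(4, k) choices of suits. Same assumed scoring A=1 through K=13 and z = 2; the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations_with_replacement
from math import comb, sqrt

RANKS = range(1, 14)  # assumed scoring: A=1 ... K=13
SUITS = 4
MU = sum(RANKS) / len(RANKS)  # equal suit counts leave the mean unchanged
SIGMA = sqrt(sum((r - MU) ** 2 for r in RANKS) / len(RANKS))


def weighted_hands(n: int):
    """Yield (sorted ranks, number of distinct card hands with those ranks)."""
    for ranks in combinations_with_replacement(RANKS, n):
        weight = 1
        for k in Counter(ranks).values():
            weight *= comb(SUITS, k)  # comb(4, 5) == 0 rules out five of a kind
        if weight:
            yield ranks, weight


def summarize(n: int, z: float = 2.0) -> tuple[float, float]:
    """Return (coverage, rate of intervals that catch MU but dip below zero)."""
    margin = z * SIGMA / sqrt(n)
    total = caught = caught_negative = 0
    for ranks, weight in weighted_hands(n):
        x_bar = sum(ranks) / n
        lo, hi = x_bar - margin, x_bar + margin
        total += weight
        if lo <= MU <= hi:
            caught += weight
            if lo < 0:
                caught_negative += weight
    assert total == comb(SUITS * len(RANKS), n)  # every hand counted exactly once
    return caught / total, caught_negative / total


for n in (3, 4, 5):
    cov, neg = summarize(n)
    print(f"n={n}: coverage {cov:.4f}, caught-but-negative {neg:.4f}")
```

The weighting trick keeps n=5 down to a few thousand rank combinations instead of about 2.6 million hands, while counting exactly the same cases.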

Finally, I know that someone could technically dispute my interpretation above of what a confidence interval means, as being incompatible with the frequentist interpretation of probability. But I've decided to emphasize this version in my classes, because it's at least comprehensible to both me and my students. I figure you can call me a Bayesian and we'll call it a day.

2009-01-22

More Topology Explaining

I was at a presentation about a year ago, where someone tried to explain basic topology concepts to non-mathematicians. Here's how they went about it: "Consider a cube of cheese and a donut," they said. "They are different shapes. If you draw a small circle on the surface of the cube of cheese, it can be shrunk down to a point. If you draw a circle on the surface of the donut the right way, it cannot be shrunk down to a point. Strange but true."

I almost fell out of my chair when I heard that explanation.

There's a whole slew of things wrong with that explanation: (1) Why a "cube" of cheese? That's only going to confuse people into thinking that the geometric "cube" shape is somehow important to the description, when it's not. Again, the only important thing is that one has a hole and the other doesn't. Use some kind of curved shape to avoid tricking people into thinking that the square-ness has anything to do with what you're explaining. (2) Why "drawing a circle"? Yes, as mathematicians we know that's one way of visualizing the important Poincaré conjecture, but here we have to look at it from the perspective of the non-expert listener. Drawings of things don't shrink and expand, so that only promotes further confusion. Use something from daily life that naturally expands and contracts for your analogy. (3) How the heck would anyone manage "drawing on a cube of cheese" in the first place?

Here's how I would explain this.

"Consider an orange and a donut. In topology, the only important difference in their shapes is that one has a hole and the other doesn't. Here's how a mathematician would demonstrate that: With the orange, if you wrap a rubber band around it, you can always flick the rubber band aside so it falls off. With the donut, there's a way to connect a rubber band through the hole-in-the-middle part so there's no way to just flick it off. (You'd have to cut & glue the rubber band back together, but then it would be always hang onto the donut.) Doing this mathematically is one way to detect exactly which shapes have holes in them."

2009-01-21

Explaining Topology

You know, every time someone gives an elementary description of Topology (a branch of modern mathematics), there's a very standard explanation of it, and I think it's a very, very bad one. They always say something complicated like this (from http://www.sciencenews.org/articles/20071222/bob11.asp):

Topology studies shapes. Specifically, it studies shapes' properties that are not affected by stretching, moving, twisting, or pulling—anything that doesn't break up the object or fuse some of its parts. The proverbial example is that, to a topologist, a coffee mug is the same as a doughnut. In your imagination, you can squash the mug into a doughnut shape, and it will retain the property of having a hole, namely its handle. A sphere is different. You can stretch a sphere into a stick and bend the stick so its ends touch. But turning that open ring into a doughnut will involve fusing the ends, and that's forbidden.

Huh? What the hell does that mean? You start off saying it's about shapes, then start talking in the negative by saying it's not about a bunch of particular properties of shapes. Then there are two pretty poor examples (asking people to imagine stretching things where bulky parts become very thin pieces; it's unclear what corresponds to what). I've taken a full year of graduate Topology, and sometimes I still have trouble understanding that description. Worst of all is this -- that's not what Topology is really about. No one is ever really interested in stretching anything in a topology course.

Here's what I say in the classes I teach: Topology is the study of connections. That's the real story; it's very simple. Yes, coffee cups and donuts are similar topologically, because they're both connected bodies with one hole through each of them. But topology is really useful for things like the following -- A road engineer categorizes intersections by how many streets meet there. A miniature figure modeller plans how complicated an item they can sculpt, knowing the resulting mold has to stay connected around their figure. A stencil-maker has to make stencils one way for letters that have holes in them, and another way for those that don't (e.g., cut out an "A", "B", or "D" normally from paper and those middle holes get disconnected and fall out; that's not a problem for letters like "C", "E", or "F", which keep the surrounding paper connected.) A subway-rider looks for the easiest route to an evening out on the town, knowing they're restricted to specific connecting trains at specific stations. A traveling salesman wants to plan the fastest, cheapest sales trip between a dozen cities, using available commercial connecting flights; or, my food delivery service wants to do the same thing with intersecting city streets.
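The stencil example is easy to make concrete: the pieces that fall out are regions of paper no longer connected to the main sheet, so counting them is a connected-components problem. Here is a minimal sketch, assuming crude 5x7 letter bitmaps of my own invention (not any standard font); `LETTERS` and `count_loose_pieces` are hypothetical names. It flood-fills each paper region and counts the ones cut off from the outside.

```python
from collections import deque

# Hypothetical 5x7 bitmaps, rows separated by spaces: '#' is the letter
# (the part the stencil-maker cuts away), '.' is the surrounding paper.
LETTERS = {
    "A": ".###. #...# #...# ##### #...# #...# #...#",
    "B": "####. #...# #...# ####. #...# #...# ####.",
    "C": ".#### #.... #.... #.... #.... #.... .####",
    "D": "###.. #..#. #...# #...# #...# #..#. ###..",
    "E": "##### #.... #.... ####. #.... #.... #####",
    "F": "##### #.... #.... ####. #.... #.... #....",
}


def count_loose_pieces(rows: list[str]) -> int:
    """Count paper regions that end up disconnected from the main sheet."""
    # Pad with a one-cell border of paper so the outer sheet is one region.
    width = len(rows[0]) + 2
    grid = ["." * width] + ["." + r + "." for r in rows] + ["." * width]
    height = len(grid)
    seen = [[False] * width for _ in range(height)]
    regions = 0
    for start_r in range(height):
        for start_c in range(width):
            if grid[start_r][start_c] != "." or seen[start_r][start_c]:
                continue
            # Breadth-first flood fill of one connected paper region.
            regions += 1
            seen[start_r][start_c] = True
            queue = deque([(start_r, start_c)])
            while queue:
                r, c = queue.popleft()
                # Edge neighbors only: paper touching at a corner isn't held together.
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < height and 0 <= nc < width
                            and grid[nr][nc] == "." and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
    # One region is the main sheet; every other one is an island that falls out.
    return regions - 1


for letter, art in LETTERS.items():
    print(letter, count_loose_pieces(art.split()))
```

With these bitmaps, A and D each lose one piece, B loses two, and C, E, and F lose none. Using edge-only (4-way) adjacency for the paper is a deliberate design choice; with diagonal adjacency the hole in an "A" like this one would wrongly count as attached to the outside.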

These are all Topological problems, dealing with how things are connected (which might be solid shapes, but is even more likely to be cords, knots, network circuits, or car/plane/train paths). I suspect I know why most explainers use the big-complicated-useless explanation, instead of the short-simple-and-effective one -- when categorizing different shapes, mathematicians do utilize functions called "homeomorphisms", which somebody at some point thought were best visualized as "stretching" operations. But, seriously, nobody who's nontechnical is going to care about that technique (no more than, say, people care about how completing the square is used to develop the quadratic formula).

The point of all that technical work in Topology is, again, pretty simple: How is this shape connected? And hence: Where can I go today with this shape? That should be the focus of our first introductions to Topology, I think, not the damn "stretching" analogy, which is practically a cancer on our attempts to explain the subject.

2009-01-18

MadMath Manifesto

"Look at it this way. When I read a math paper it's no different than a musician reading a score. In each case the pleasure comes from the play of patterns, the harmonics and contrasts... The essential thing about mathematics is that it gives esthetic pleasure without coming through the senses." (Rudy Rucker, A New Golden Age)

"'I find herein a wonderful beauty,' he told Pandelume. 'This is no science, this is art, where equations fall away to elements like resolving chords, and where always prevails a symmetry either explicit or multiplex, but always of a crystalline serenity.'" (Jack Vance, The Dying Earth)

The preceding dialogues are both from works of fiction. That being said, they may in fact truly represent how the majority of mathematicians experience their work. For example, Rudy Rucker is himself a retired professor of mathematics and computers (as well as a science fiction author). My own instructor of advanced statistics would end every proof with the heartfelt words, "And that's the beauty."

I've heard that kind of sentiment a lot. But I never experienced mathematics that way. I now have a graduate degree in mathematics and statistics, and currently teach full-time as a lecturer of college mathematics, and these kinds of declarations still mystify me. Math has never felt "beautiful" or "poetic". I would never in a million years think to describe math as "pleasurable" or "serene".

Math drives me mad.

My experience of mathematics is this: Math is a battle. It may be necessary, it may be demanding, it may even be heroic. But the existential reality is that if you're doing math, you've got a problem. You very literally have a problem, something that is bringing your useful work to a halt, a problem that needs solving. And personally, I don't like problems; I am not fond of them; I wish they were not there. I want them to be gone, eradicated, and out of my way. I don't like puzzles; I want solutions. And once you have a solution, then you're not doing math anymore. So the process of mathematics is an experience in infuriation.

So, again: Math is a battle. It is a battle that feels like it must be fought. It can feel like a violent addiction; hours and days and nights disappearing into a mental blackness, unable to track the time or bodily needs. Becoming aware again at the very edge of exhaustion, hunger, filth, and collapse.

At worst, math can feel like a horrible life-or-death struggle, clawing messily in the midst of muddy, bloody, poisonous trenches. At best, it may feel like an elegant martial-arts move, managing to use the enemy's weight against itself, to its destruction.

I love seeing a powerful new mathematical theorem. But not because it "gives esthetic pleasure"; I have yet to see that. Rather, because a powerful theorem is the mathematical equivalent to "Nuke it from orbit – It's the only way to be sure". A compelling philosophy.

On the day that you really need math it will be a high-explosive, demolishing the barrier between you and where you want to go. Is there a pleasure in that? Perhaps, but not from the "play of patterns, the harmonics and contrasts". Rather, it's because blowing up things is cool. Like at a monster-truck rally, crushing cars is cool. Math may not be beautiful or fun for us, but it is powerful, and that's what we need from it.

Of course, I also don't know how to read a music score, so I'm similarly mystified if that's the operating analogy for most mathematicians. Perhaps I'm missing something essential, but I have to stay true to my own experience. If math is going to be useful or worthwhile then it must literally rock you in some way, relieve an unbearable tension, and change your perception of what is possible.

And so, the battle continues.